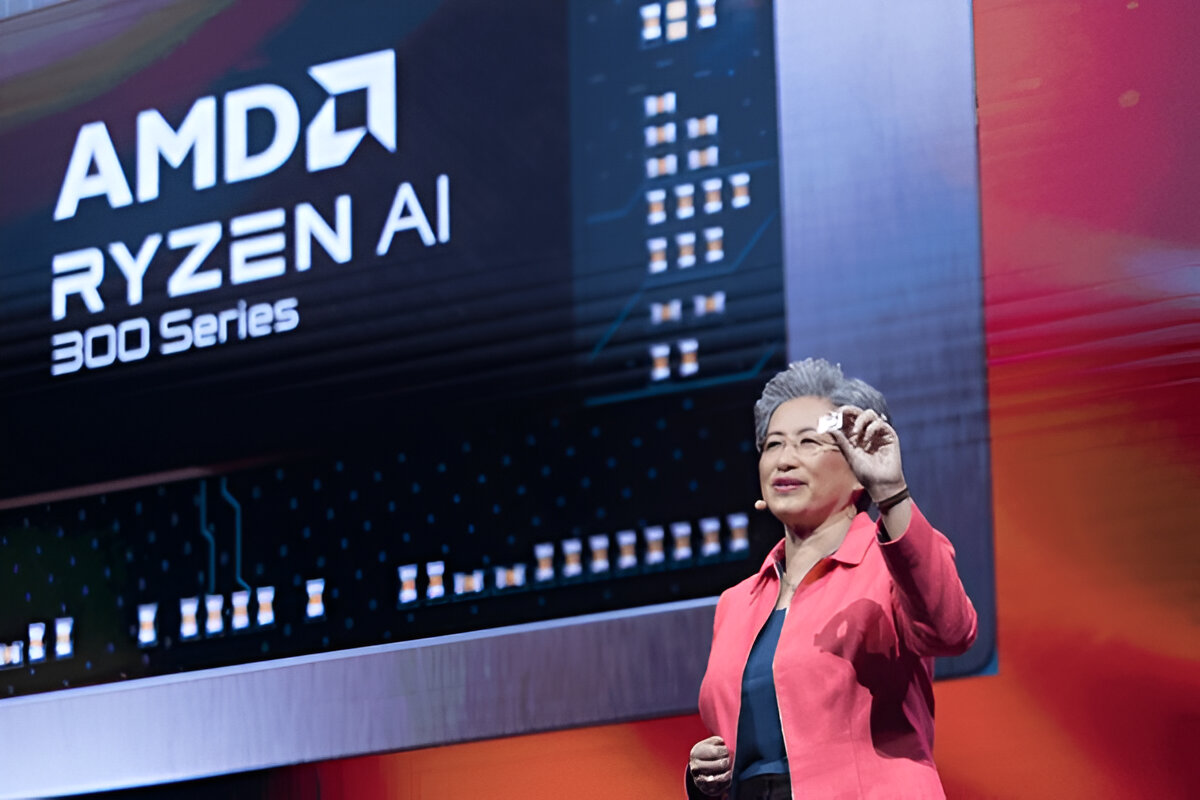

In a move that’s sending shockwaves through the tech world, AMD CEO Lisa Su has unveiled a new lineup of AI chips that could significantly shift the balance of power in the semiconductor industry. For years, Nvidia has dominated the artificial intelligence (AI) and machine learning market with its powerful GPUs, but AMD is now rewriting that narrative with innovation, performance, and timing that’s hard to ignore.

The Rise of AMD in the AI Race

Until recently, Nvidia held a dominant position in AI and deep learning hardware, with its A100 and H100 chips powering everything from ChatGPT to Tesla's GPU training clusters. However, AMD is quickly closing the gap. At the heart of this push is AMD's Instinct MI300X accelerator, an AI chip that is already drawing serious attention from major cloud providers and enterprises.

The MI300X is built on a chiplet architecture using AMD's CDNA 3 GPU technology, and AMD says it delivers large performance-per-watt gains over its predecessor, the Instinct MI250X. With 192GB of HBM3 memory, it is aimed squarely at large language models (LLMs) such as GPT and other transformer-based models, which need both large memory capacity to hold their weights and high memory bandwidth to feed the compute units.
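To see why 192GB matters, a rough back-of-the-envelope calculation helps. The sketch below is only an estimate under simplifying assumptions: it counts model weights alone (ignoring activations, the KV cache used during inference, and framework overhead), treats 1 GB as 10^9 bytes, and uses hypothetical helper names chosen for illustration. The 80GB figure is the memory capacity of Nvidia's H100 SXM for comparison.

```python
# Rough estimate of how large a model's weights can fit in accelerator memory.
# Assumption: weights only; activations, KV cache and runtime overhead are ignored.
# Assumption: 1 GB = 10**9 bytes, for round numbers.

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def max_params_billions(memory_gb: float, dtype: str) -> float:
    """Upper bound (in billions) on parameters whose weights fit in memory_gb."""
    total_bytes = memory_gb * 10**9
    return total_bytes / BYTES_PER_PARAM[dtype] / 10**9


def weights_gb(params_billions: float, dtype: str) -> float:
    """Memory (in GB) needed just to store a model's weights."""
    return params_billions * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    # Largest model (weights only) that fits in 192 GB at each precision.
    for dtype in ("fp32", "fp16", "int8"):
        print(f"192 GB, {dtype}: ~{max_params_billions(192, dtype):.0f}B parameters")

    # A 70B-parameter model in fp16 versus an 80 GB and a 192 GB accelerator.
    need = weights_gb(70, "fp16")
    print(f"70B fp16 weights: {need:.0f} GB")
    print("fits in 80 GB:", need <= 80)
    print("fits in 192 GB:", need <= 192)
```

By this estimate, a 70-billion-parameter model in fp16 needs about 140GB for its weights alone, which exceeds a single 80GB accelerator but fits on one 192GB MI300X. In practice the extra headroom also goes to the KV cache and activations, so real deployments need more memory than this lower bound.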

AI Workloads Are Changing—and AMD Is Ready

One of the biggest differentiators is AMD’s focus on scalable AI infrastructure. Instead of trying to beat Nvidia at its own game, Lisa Su’s team is addressing what customers actually need: energy-efficient, high-memory, AI-optimized systems that can scale across data centers.

As AI models keep growing, memory capacity and throughput have become critical bottlenecks. AMD is targeting this pain point by positioning the MI300X as a strong alternative for training and, especially, inference of large models, where fitting a whole model on fewer chips can reduce cost and complexity.

AMD is also working closely with open-source AI frameworks such as PyTorch and TensorFlow through its ROCm software stack, aiming to ease the transition away from Nvidia's CUDA-based environments.

Lisa Su Breaks Silence

In her recent keynote, Lisa Su didn't hold back. "AI is the defining technology of our generation, and we're building the best possible solutions to meet that demand," she declared. Su highlighted AMD's vision of "open ecosystem collaboration," in direct contrast to Nvidia's more tightly integrated hardware and software model.

This message has resonated with enterprise customers who want more vendor-agnostic, customizable AI systems. Lisa Su's calculated, composed leadership style has become one of AMD's key strengths: rather than making bold promises, she focuses on delivering products.

Why Nvidia Should Be Worried

Nvidia still dominates the AI GPU market, but that position faces growing pressure. Supply chain bottlenecks, rising chip costs, and customer frustration over dependence on a single vendor are pushing enterprises to consider alternatives.

AMD's aggressive pricing and improving supply availability make it a serious threat. Major customers such as Microsoft and Meta have announced plans to deploy the MI300X, and more announcements are expected soon.

If AMD can deliver consistent performance gains and better availability, Nvidia's lead could narrow quickly.

What This Means for the Tech Industry

This shift isn’t just about two chipmakers. The battle between AMD and Nvidia will determine how AI infrastructure evolves globally. More competition means:

Lower prices for cloud providers

Faster innovation cycles

More accessible AI compute for startups and researchers

AMD’s entry also diversifies the supply chain—something nations and businesses alike are desperately seeking amid geopolitical tensions and tech export controls.

Updates by TrendToday360
